ResNet

1. ResNet Architecture

Original ResNet paper: Deep Residual Learning for Image Recognition

Residual Networks

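The Abstract already states that deeper networks are harder to train, and the paper goes on to show that they suffer from a degradation phenomenon. Residual networks, by contrast, are easier to optimize, and making them deeper improves accuracy: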

We provide comprehensive empirical evidence showing that these residual networks are easier to optimize, and can gain accuracy from considerably increased depth.

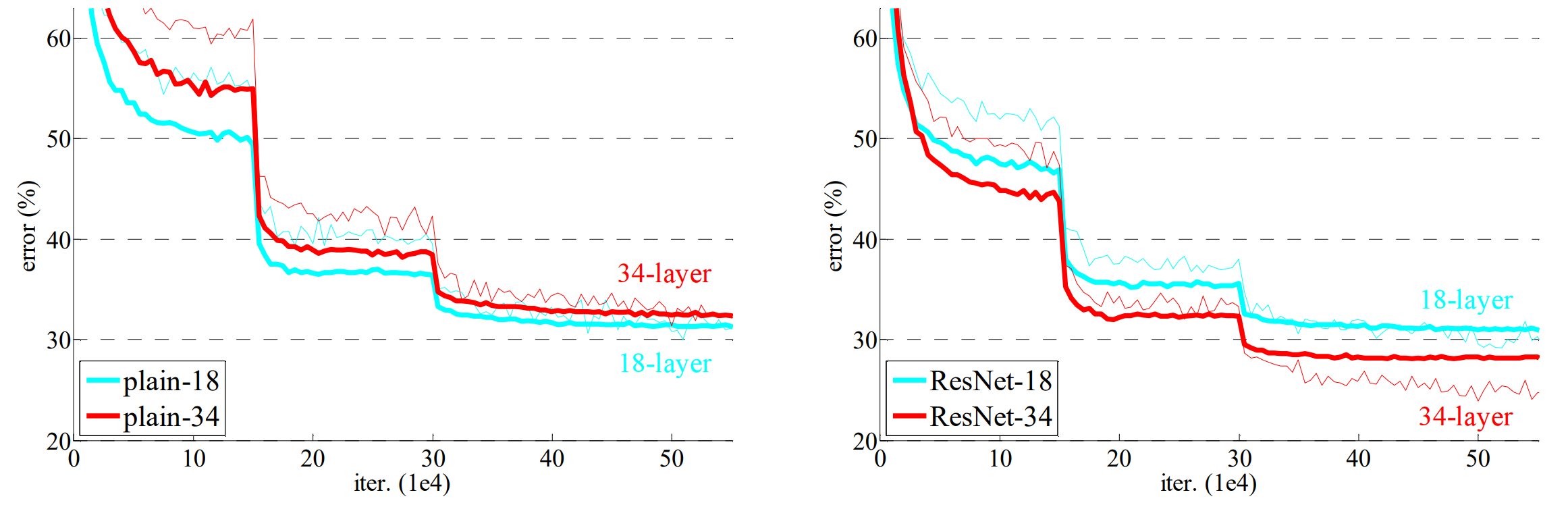

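As the figure above shows, making the model deeper increases its test error instead of lowering it. This is the "degradation" problem. In the paper's Figure 1, the 56-layer plain network on CIFAR-10 also has a higher training error than the 20-layer one.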

The crucial point is that this is not overfitting, because the training error rises as well. In the paper's words:

When deeper networks are able to start converging, a degradation problem has been exposed: with the network depth increasing, accuracy gets saturated (which might be unsurprising) and then degrades rapidly. Unexpectedly, such degradation is not caused by overfitting, and adding more layers to a suitably deep model leads to higher training error.

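A simple argument shows why degradation should not happen in principle. Suppose the extra deeper layers simply passed the shallower layers' output straight through. Then the deeper network should perform no worse than the shallow one.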

There exists a solution by construction to the deeper model: the added layers are identity mapping, and the other layers are copied from the learned shallower model. The existence of this constructed solution indicates that a deeper model should produce no higher training error than its shallower counterpart. But experiments show that our current solvers on hand are unable to find solutions that are comparably good or better than the constructed solution (or unable to do so in feasible time).

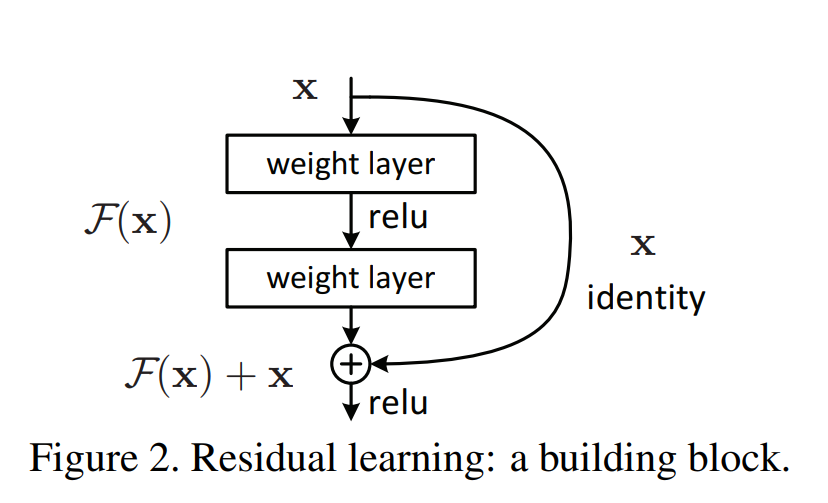

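ResNet solves the degradation problem with a deep residual learning framework. Instead of letting a few stacked layers fit a desired mapping $H(\mathbf{x})$ directly, the layers fit the residual $F(\mathbf{x}) := H(\mathbf{x}) - \mathbf{x}$. The original mapping is then recovered as $F(\mathbf{x}) + \mathbf{x}$ through a shortcut connection that skips those layers.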
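Below is a minimal NumPy sketch of why the residual form helps. It makes a few simplifying assumptions:

- Fully connected layers stand in for convolutions (the paper's notation also uses FC layers for simplicity).
- BN and biases are left out.
- `plain_block` and `residual_block` are hypothetical names used only for illustration.

With all weights at zero, the plain block outputs zeros, while the residual block reduces to the identity on non-negative inputs. So a layer that should behave like the identity only has to push its residual toward zero.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def plain_block(x, w1, w2):
    # Plain: the two stacked layers must produce the whole mapping H(x) themselves.
    return relu(w2 @ relu(w1 @ x))

def residual_block(x, w1, w2):
    # Residual: the same layers only produce F(x) = w2 @ relu(w1 @ x);
    # the shortcut adds x back before the final ReLU: y = relu(F(x) + x).
    return relu(w2 @ relu(w1 @ x) + x)

rng = np.random.default_rng(0)
d = 8
x = np.abs(rng.standard_normal(d)) + 0.1  # non-negative, like the output of an earlier ReLU
w_zero = np.zeros((d, d))

print(np.allclose(plain_block(x, w_zero, w_zero), x))     # False: output collapses to 0
print(np.allclose(residual_block(x, w_zero, w_zero), x))  # True: zero residual = identity
```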

In a convolutional network, the output $F(\mathbf{x})$ may have different dimensions from $\mathbf{x}$. The paper handles the two cases as follows:

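When the dimensions match, the two are added element-wise (Eq. 1):

$$\mathbf{y} = F(\mathbf{x}, \{W_i\}) + \mathbf{x} \tag{1}$$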

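When the dimensions differ, $\mathbf{x}$ is first mapped by a linear projection $W_s$ (Eq. 2):

$$\mathbf{y} = F(\mathbf{x}, \{W_i\}) + W_s\mathbf{x} \tag{2}$$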
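The two cases can be sketched in NumPy as follows. The sketch assumes:

- fully connected layers instead of convolutions;
- a two-layer residual function $F(\mathbf{x}) = W_2\,\sigma(W_1\mathbf{x})$ with ReLU as $\sigma$;
- a ReLU after the addition, as in the paper's building block.

`residual_forward` is a hypothetical helper, not code from the paper.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def residual_forward(x, w1, w2, ws=None):
    """Return relu(F(x) + shortcut(x)) with F(x) = w2 @ relu(w1 @ x).

    ws=None  -> identity shortcut, Eq. (1); F(x) and x must have the same shape.
    ws given -> projection shortcut ws @ x, Eq. (2).
    """
    f = w2 @ relu(w1 @ x)
    if ws is None:
        if f.shape != x.shape:
            raise ValueError("dimensions differ: a projection ws is required (Eq. 2)")
        shortcut = x
    else:
        shortcut = ws @ x
    return relu(f + shortcut)

rng = np.random.default_rng(0)
x = rng.standard_normal(64)

# 64 -> 64: identity shortcut, Eq. (1), no extra parameters
y1 = residual_forward(x, rng.standard_normal((64, 64)), rng.standard_normal((64, 64)))

# 64 -> 128: projection shortcut, Eq. (2), ws adds 128 x 64 weights
y2 = residual_forward(x, rng.standard_normal((128, 64)), rng.standard_normal((128, 128)),
                      ws=rng.standard_normal((128, 64)))

print(y1.shape, y2.shape)  # (64,) (128,)
```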

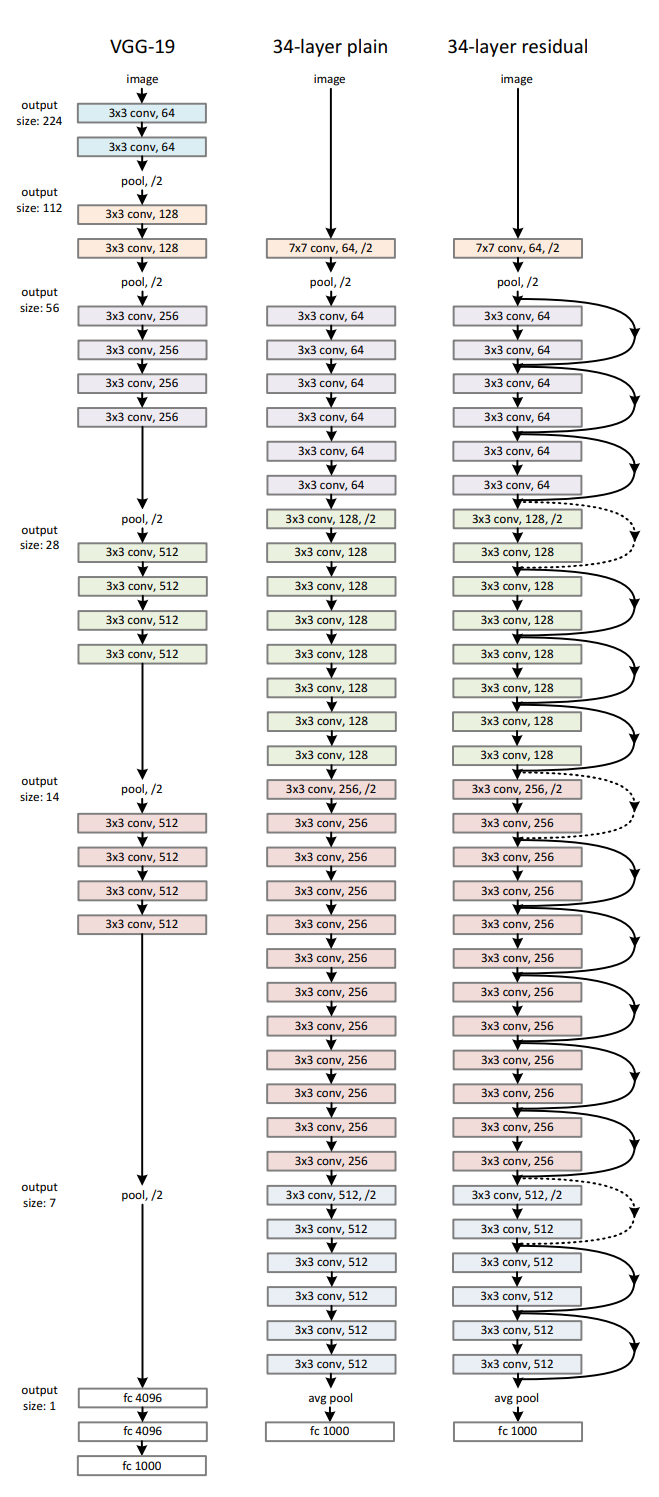

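In the figure below, the left model is VGG-19. The middle one is a 34-layer network built by stacking layers in the VGG style, called plain-34. Plain-34 is deeper, yet its FLOPs (multiply-adds, computable layer by layer) are only 18% of VGG-19's. The savings come from fewer filters and early downsampling:

- Plain-34 shrinks the input to 56×56 with a stride-2 7×7 convolution and max pooling before its 3×3 layers.
- VGG-19 runs wide 3×3 convolutions at 224×224 and 112×112.

VGG-19's fully connected layers account for most of its parameters but only a small share of its FLOPs.

The right model adds shortcut connections (the residual structure) to the middle one. Solid lines are identity shortcuts: their input is added directly to the later feature map (Eq. 1). Dashed lines mark where the dimensions increase and must be matched (Eq. 2, or the zero-padding alternative described next). The three models cost 19.6 billion, 3.6 billion and 3.6 billion FLOPs respectively. Identity shortcuts add neither parameters nor computational complexity, apart from the negligible element-wise addition. This also makes plain and residual networks directly comparable.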
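As a sanity check on the 3.6 billion figure, the sketch below counts the multiply-adds of plain-34 layer by layer. It assumes the layer list of the 34-layer column in the paper's architecture table:

- a 7×7/2 convolution;
- then 6, 8, 12 and 6 3×3 layers at 56×56, 28×28, 14×14 and 7×7, with 64, 128, 256 and 512 filters;
- then a 1000-way FC layer.

BN, ReLU, pooling and biases are ignored, so this approximates the paper's accounting rather than reproducing it exactly. The function names are hypothetical.

```python
def conv_macs(k, c_in, c_out, h_out, w_out):
    # Multiply-adds of a k x k convolution (bias ignored): one k*k*c_in dot
    # product per output channel per output position.
    return k * k * c_in * c_out * h_out * w_out

def plain34_macs():
    total = conv_macs(7, 3, 64, 112, 112)  # conv1: 7x7, 64 filters, stride 2 -> 112x112
    # Max pooling to 56x56 is not counted.
    c_in = 64
    # (number of 3x3 conv layers, filters, output size) for each stage
    stages = [(6, 64, 56), (8, 128, 28), (12, 256, 14), (6, 512, 7)]
    for n_layers, c_out, size in stages:
        for _ in range(n_layers):
            total += conv_macs(3, c_in, c_out, size, size)
            c_in = c_out
    total += 512 * 1000  # 1000-way FC layer after global average pooling
    return total

macs = plain34_macs()
print(f"{macs / 1e9:.2f} billion")      # 3.64 billion
print(f"{macs / 19.6e9:.1%} of VGG-19")  # 18.6% of VGG-19
```

The total of about 3.64 billion matches the paper's 3.6 billion, and the ratio to VGG-19 lands close to the quoted 18%. Every 3×3 layer except the first one of each stage costs the same 115,605,504 multiply-adds. This is the effect of halving the feature map while doubling the number of filters.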

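There are two ways to increase dimensions on the shortcut:

- (A) Keep the identity and pad the extra channels with zeros, which adds no parameters.
- (B) Use a 1×1 convolution as the projection.

In both cases, the shortcut uses a stride of 2 when it crosses feature maps of two sizes. The experiments section compares the two options.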
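The two shortcut options can be compared in a NumPy sketch on a single (C, H, W) feature map. It assumes stride-2 subsampling as the spatial downsampling in both cases and a bias-free 1×1 projection. The function names are hypothetical.

```python
import numpy as np

def shortcut_zero_pad(x, c_out):
    """Option A: identity with stride-2 subsampling; extra channels are zeros (no parameters)."""
    x = x[:, ::2, ::2]                             # match the halved feature map
    pad = c_out - x.shape[0]
    return np.pad(x, ((0, pad), (0, 0), (0, 0)))   # constant zero padding on the channel axis

def shortcut_projection(x, ws):
    """Option B: 1x1 convolution with stride 2; ws has shape (C_out, C_in), no bias."""
    x = x[:, ::2, ::2]
    return np.einsum("oc,chw->ohw", ws, x)         # same channel mix at every position

rng = np.random.default_rng(0)
x = rng.standard_normal((64, 56, 56))
a = shortcut_zero_pad(x, 128)
ws = rng.standard_normal((128, 64))
b = shortcut_projection(x, ws)
print(a.shape, b.shape, ws.size)  # (128, 28, 28) (128, 28, 28) 8192
```

Both produce the same output shape. Option A costs no parameters, while option B adds 128 × 64 = 8,192 weights for this 64→128 transition.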

Experiments

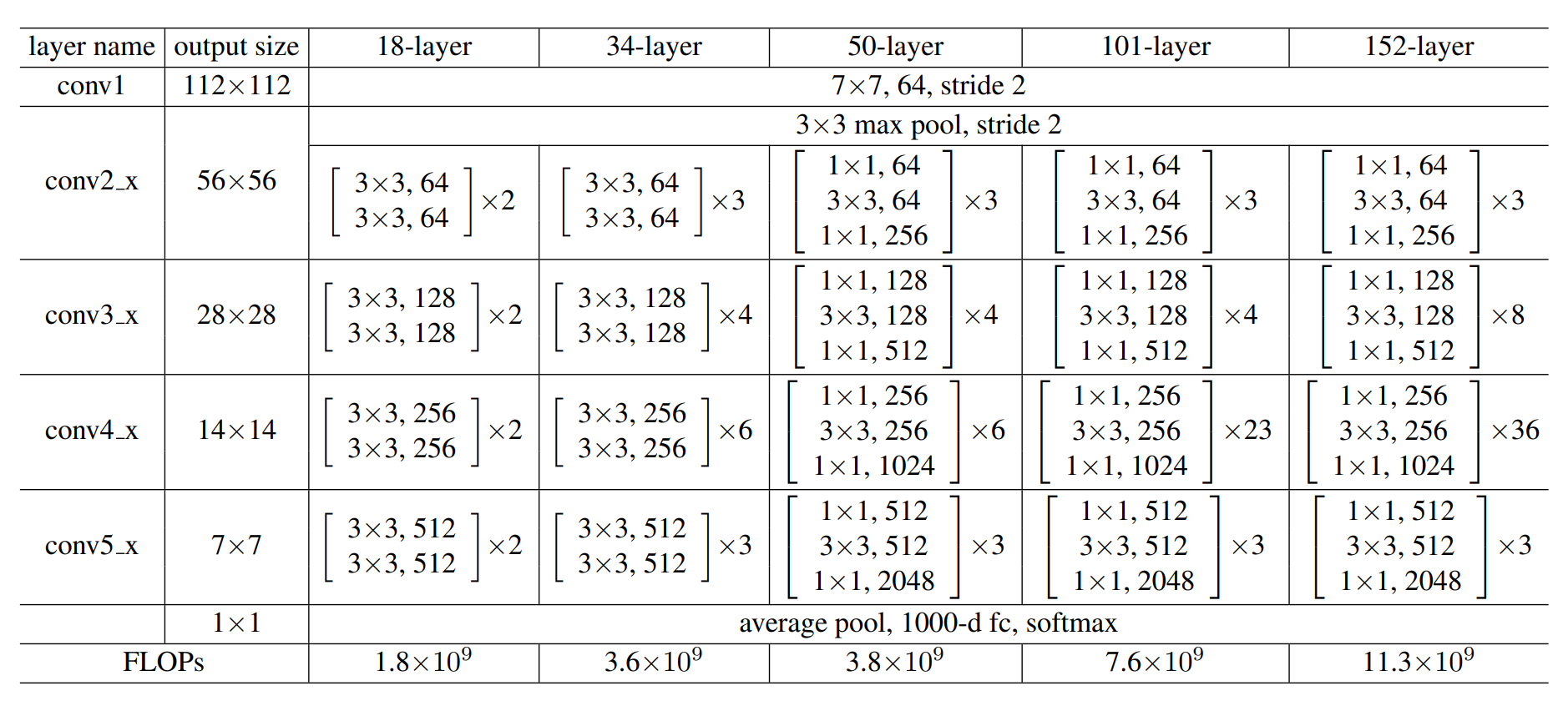

The architectures of ResNet-18, ResNet-34, ResNet-50, ResNet-101 and ResNet-152 are as follows:

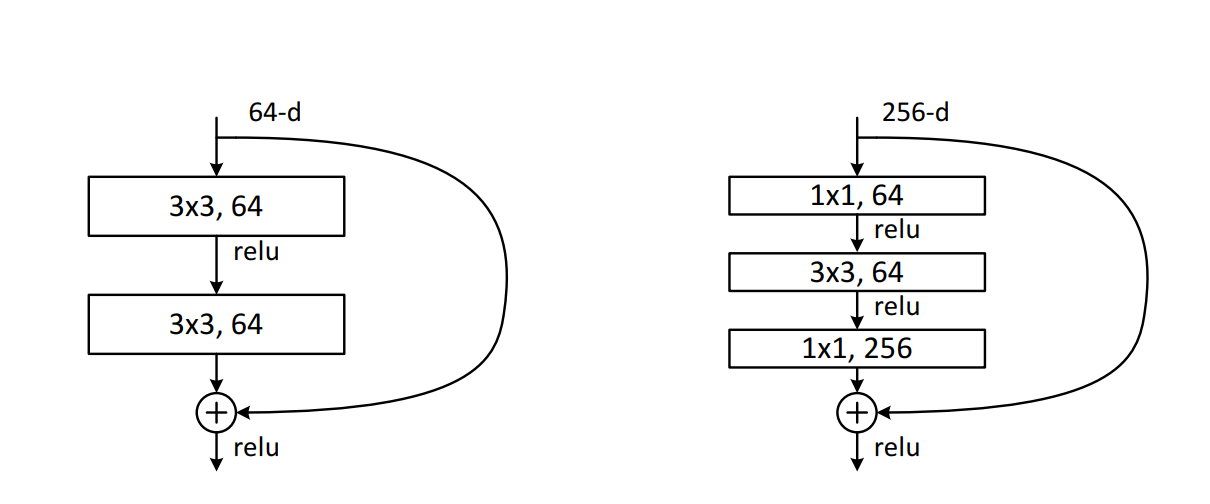
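The depth in each name follows from the number of blocks per stage. The sketch below assumes the block counts from the paper's architecture table (conv2_x to conv5_x) and counts only weighted layers:

- two per basic block;
- three per bottleneck;
- plus the first 7×7 convolution and the final FC layer.

`CONFIGS` and `depth` are illustrative names.

```python
# (blocks per stage for conv2_x..conv5_x, weighted layers per block)
CONFIGS = {
    "ResNet-18":  ([2, 2, 2, 2], 2),   # basic blocks: two 3x3 convs
    "ResNet-34":  ([3, 4, 6, 3], 2),
    "ResNet-50":  ([3, 4, 6, 3], 3),   # bottlenecks: 1x1, 3x3, 1x1
    "ResNet-101": ([3, 4, 23, 3], 3),
    "ResNet-152": ([3, 8, 36, 3], 3),
}

def depth(blocks, layers_per_block):
    # +2 for the initial 7x7 convolution and the final fully connected layer
    return sum(blocks) * layers_per_block + 2

for name, (blocks, per_block) in CONFIGS.items():
    print(name, depth(blocks, per_block))  # 18, 34, 50, 101, 152 in order
```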

ResNet-50, ResNet-101 and ResNet-152 use a residual block called the bottleneck:

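The bottleneck block works in three steps:

1. A 1×1 convolution reduces the dimension.
2. A 3×3 convolution operates on the reduced dimension.
3. Another 1×1 convolution restores the dimension so that it matches the shortcut.

This greatly reduces computation. Note that the bottleneck has only one 3×3 convolution, instead of the two in the basic block.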
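A quick parameter count shows why the bottleneck is cheap. It uses the pair from the paper's bottleneck figure: a basic block on 64-d features versus a bottleneck on 256-d features. Only convolution weights are counted (biases and BN ignored). When all convolutions in a block run at the same spatial size, their multiply-adds scale by the same H×W factor, so the comparison carries over to computation.

```python
def conv_params(k, c_in, c_out):
    # Weights of a k x k convolution (biases and BN parameters ignored)
    return k * k * c_in * c_out

# Basic block on 64-d features: two 3x3 convs, 64 -> 64 -> 64
basic_64 = conv_params(3, 64, 64) + conv_params(3, 64, 64)

# Bottleneck on 256-d features: 1x1 reduce to 64, 3x3 at 64, 1x1 restore to 256
bottleneck_256 = conv_params(1, 256, 64) + conv_params(3, 64, 64) + conv_params(1, 64, 256)

# For contrast: a basic block applied directly at 256-d
basic_256 = conv_params(3, 256, 256) + conv_params(3, 256, 256)

print(basic_64, bottleneck_256, basic_256)  # 73728 69632 1179648
```

The bottleneck handles 4× wider features with slightly fewer weights than the 64-d basic block. A basic block applied directly at 256-d would need about 17× more.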

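What 1×1 convolutions do:

- Increase or reduce the number of channels (dimensions)

- Channel fusion / cross-channel information exchange

- Add nonlinearity while keeping the feature map size unchanged (no loss of resolution). The 1×1 convolution itself is linear, but the ReLU that follows it is nonlinear.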
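A 1×1 convolution applies the same channel-mixing matrix at every pixel. The NumPy sketch below assumes a (C, H, W) layout and uses a hypothetical `conv1x1` helper. It reduces 256 channels to 64 on a 14×14 map, leaves the spatial size unchanged, and gets its nonlinearity from the following ReLU.

```python
import numpy as np

def conv1x1(x, weight, bias=None):
    """1x1 convolution on x of shape (C_in, H, W) with weight of shape (C_out, C_in).

    Every spatial position gets the same linear map across channels, so H and W are unchanged.
    """
    y = np.einsum("oc,chw->ohw", weight, x)
    if bias is not None:
        y = y + bias[:, None, None]
    return y

rng = np.random.default_rng(0)
x = rng.standard_normal((256, 14, 14))
reduce_w = rng.standard_normal((64, 256))      # 256 -> 64 channels (dimension reduction)
y = np.maximum(conv1x1(x, reduce_w), 0.0)      # the ReLU supplies the nonlinearity
print(y.shape)  # (64, 14, 14)
```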

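The paper compares training curves on ImageNet. Thin curves show training error, and bold curves show validation error on center crops:

- Left: plain networks with 18 and 34 layers.
- Right: ResNets with 18 and 34 layers.

In this comparison, the residual networks have no extra parameters compared with their plain counterparts. The deeper ResNet reaches a lower validation error instead of suffering the degradation seen in the deeper plain network.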

Training details:

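- Data augmentation: horizontal flipping, per-pixel mean subtraction, random 224×224 crops

- Shortcuts: when the input and output dimensions match, they are added directly. When they differ, the input is transformed to match the output dimensions with zero-padding or a 1×1 convolution.

- Design rules: layers with the same output feature map size have the same number of filters (channels). When the feature map size is halved (by stride-2 convolutions), the number of filters is doubled, so the time complexity per layer stays the same.

- Batch normalization (BN) right after each convolution and before the activation

- Batch size 256; initial learning rate 0.1, divided by 10 when the error plateaus; SGD with weight_decay = 0.0001 and momentum = 0.9

- No Dropout

- The network ends with a global average pooling layer followed by a 1000-way fully connected layer with softmax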
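The optimizer settings can be sketched in plain Python/NumPy as follows. This makes two assumptions, since frameworks differ in these details:

- The plateau detection (`patience`) is an assumption. The paper only says the learning rate is divided by 10 when the error plateaus.
- The momentum update uses the common `v = momentum * v + g` convention, with weight decay added to the gradient.

`PlateauLR` and `sgd_momentum_step` are hypothetical names.

```python
import numpy as np

class PlateauLR:
    """Divide the learning rate by 10 when the monitored error stops improving.

    `patience` (evaluations without improvement before a drop) is an illustrative
    assumption; the paper does not specify how a plateau is detected.
    """
    def __init__(self, lr=0.1, factor=0.1, patience=3):
        self.lr, self.factor, self.patience = lr, factor, patience
        self.best = float("inf")
        self.bad_steps = 0

    def step(self, error):
        if error < self.best:
            self.best, self.bad_steps = error, 0
        else:
            self.bad_steps += 1
            if self.bad_steps >= self.patience:
                self.lr *= self.factor
                self.bad_steps = 0
        return self.lr

def sgd_momentum_step(w, grad, velocity, lr, momentum=0.9, weight_decay=1e-4):
    # L2 weight decay folded into the gradient, then a heavy-ball momentum update
    g = grad + weight_decay * w
    velocity = momentum * velocity + g
    return w - lr * velocity, velocity

sched = PlateauLR()
for err in [0.9, 0.7, 0.6, 0.6, 0.61, 0.62]:   # three evaluations without improvement
    lr = sched.step(err)
print(round(lr, 6))  # 0.01
```

In PyTorch, which the code section imports, the same settings correspond to `torch.optim.SGD(params, lr=0.1, momentum=0.9, weight_decay=1e-4)` combined with `torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, factor=0.1)`.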

Code

Simplified ResNet

```python
import torch.nn as nn
```
